As chat bots and other conversational UIs creep into our daily interactions, a few design patterns have begun to emerge. It’s still a very new and rapidly evolving field, and while the jury is still out on best practices, there are some emerging trends around these text-based conversational UIs.

Suggested, contextual replies

Often the system knows the basic responses that one might give and can surface suggested replies, eliminating the need to type them out manually. This contextually aware, anticipatory UI feature saves time and energy.

Inbox by Google

Inbox by Google uses its powerful artificial neural net to learn how a user communicates, then suggests short pre-written responses to received emails.

Yala Slack Bot

Yala, the social media manager bot for Slack, offers a few options at the beginning of an interaction. There is no need to remember the commands or options. It offers the two most likely tasks and gives the user an option to see more.

Delayed responses

When you text a friend, they can’t respond instantly to every message. It’s not possible. They have to read the message, process it, think of a response, type it out, and tap send. Even the fastest response to a basic question would still take a few seconds. Though bots might not need that time to process, intentionally delaying the system response to a user input makes it feel a bit more human.
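
As a rough illustration of the idea, here is a minimal Python sketch of a delayed reply. Everything in it is an assumption made for the example: the function names, the typing speed of 30 characters per second, and the 0.5 to 3 second bounds are hypothetical, and `send` and `show_typing` stand in for whatever a real messaging platform provides.

```python
import time
from typing import Callable


def typing_delay_seconds(
    reply: str,
    chars_per_second: float = 30.0,  # assumed typing speed, not a measured value
    min_delay: float = 0.5,          # assumed lower bound so short replies still pause
    max_delay: float = 3.0,          # assumed upper bound so long replies don't stall
) -> float:
    """Estimate how long a person might take to type `reply`, clamped to a range."""
    estimated = len(reply) / chars_per_second
    return max(min_delay, min(estimated, max_delay))


def send_with_typing_delay(
    reply: str,
    send: Callable[[str], None],
    show_typing: Callable[[], None],
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Show a typing indicator, wait a human-feeling moment, then send the reply."""
    show_typing()
    sleep(typing_delay_seconds(reply))
    send(reply)


if __name__ == "__main__":
    send_with_typing_delay(
        "Here are today's top stories.",
        send=print,
        show_typing=lambda: print("..."),
    )
```

Scaling the pause with the length of the reply and capping it keeps the effect from becoming an annoyance; the right numbers would need testing with real users.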

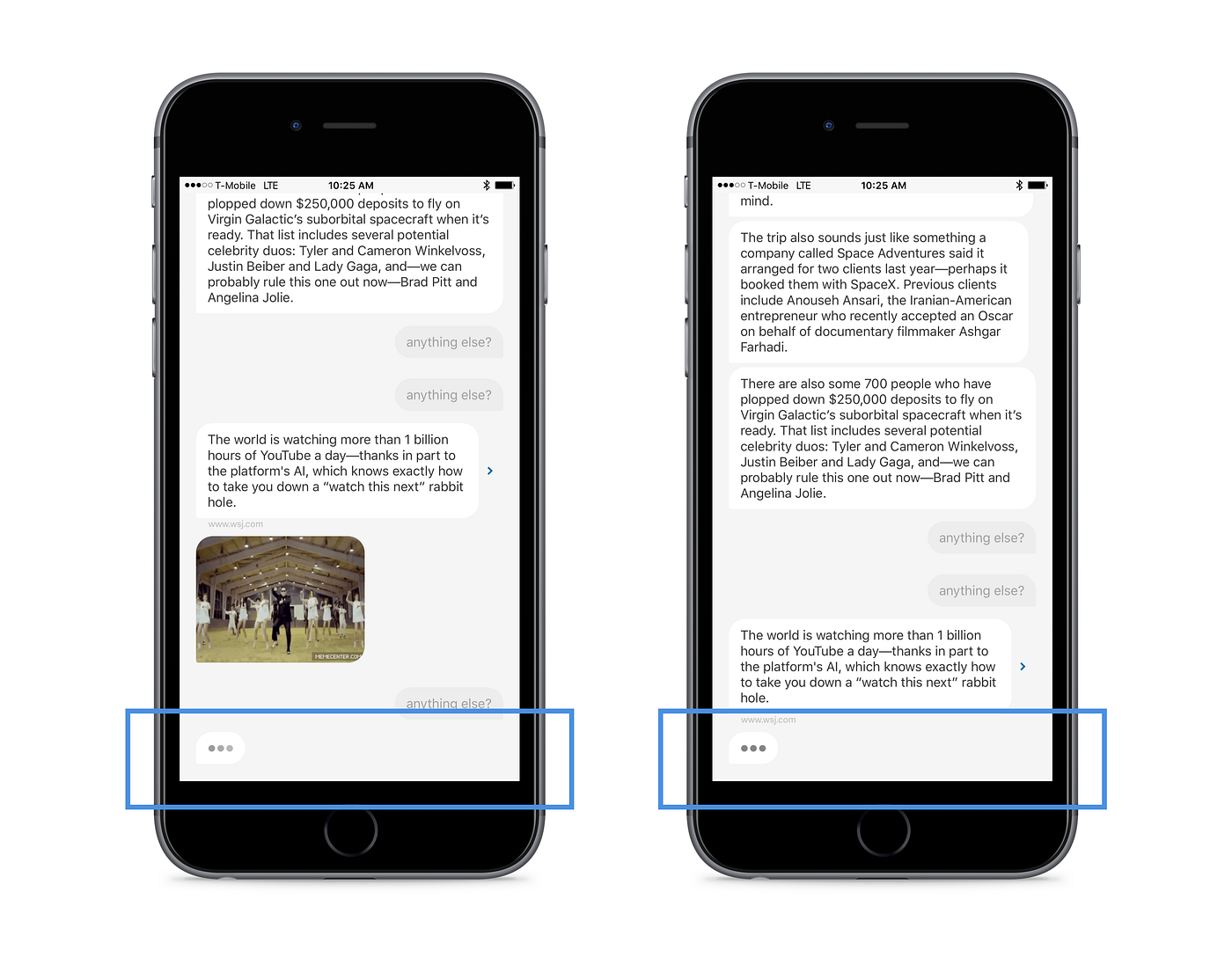

Quartz

Quartz’s news app shows the “typing” animation as it loads additional content. It’s likely not technically necessary because the content should already be loaded in their CMS and sequenced. Instead, they add this animation to make it feel as though a friend is sending you these stories instead of a server.

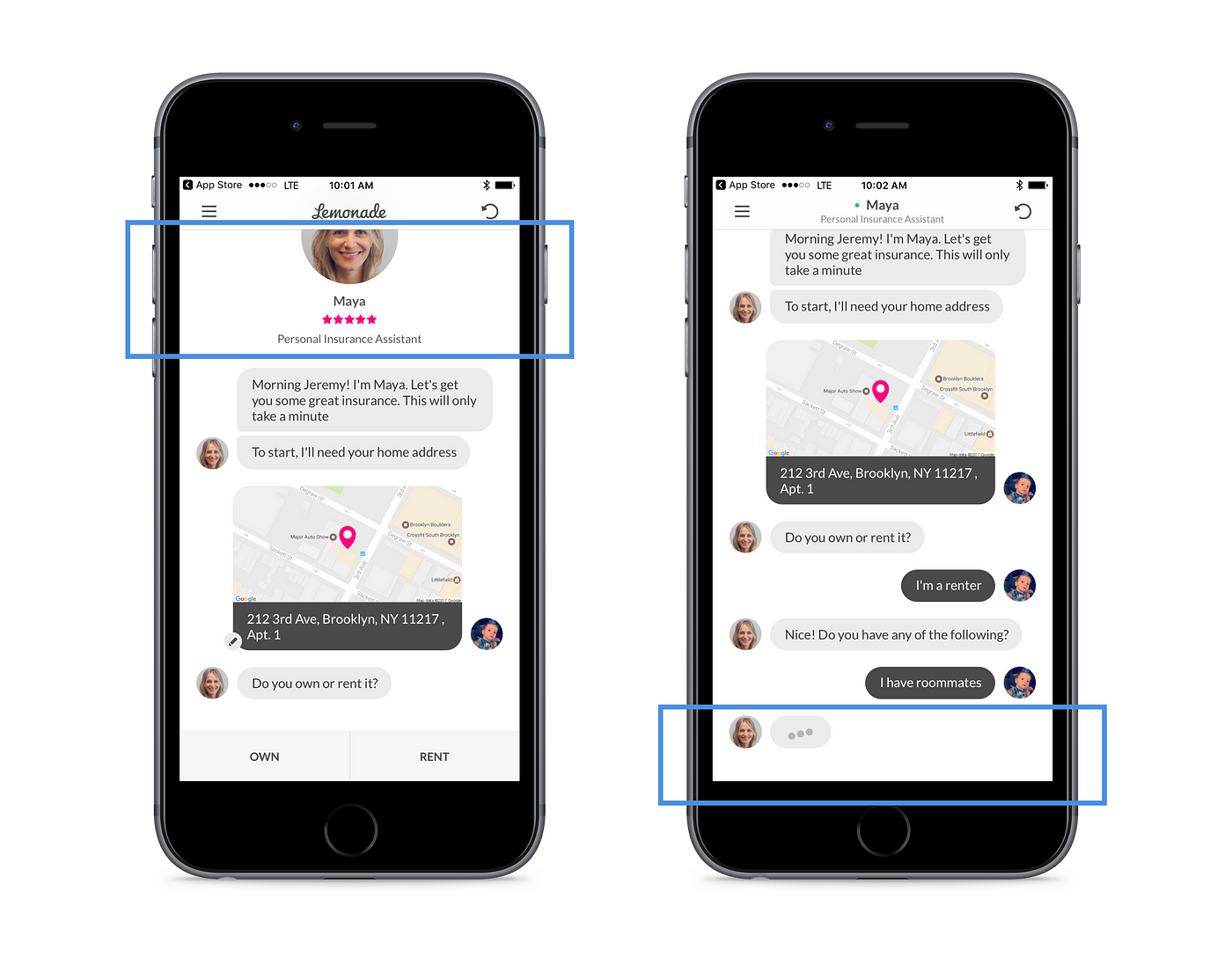

Lemonade

Lemonade goes as far as to imply there is actually a person on the other side of the chat, in this case “Maya,” who even has a 5-star rating. When you reply to “her,” it looks like “she” is typing a response.

Batching responses

Many chat bots divide up otherwise long responses into a few smaller messages. After all, “SMS” stands for “Short Message Service,” and was originally limited to 160 characters. That was the first conversational UI that many people experienced, and is what people are used to in this context.
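
One way a bot might batch a long response is to split it at sentence boundaries and pack sentences into messages up to a length limit. The sketch below is only an illustration under that assumption: `batch_message` is a hypothetical helper, and the 160-character default simply borrows the SMS limit mentioned above, not a limit any of these bots is known to use.

```python
import re
import textwrap
from typing import List


def batch_message(text: str, limit: int = 160) -> List[str]:
    """Split `text` into short messages, breaking at sentence ends where possible."""
    # Split after ., ! or ? when followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    batches: List[str] = []
    current = ""
    for sentence in sentences:
        if not sentence:
            continue
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= limit:
            # The sentence still fits in the message being built.
            current = candidate
            continue
        if current:
            batches.append(current)
        # A single sentence longer than the limit is wrapped at word boundaries.
        pieces = textwrap.wrap(sentence, width=limit)
        batches.extend(pieces[:-1])
        current = pieces[-1]
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    for message in batch_message("Hello there. How are you? I am fine.", limit=25):
        print(message)
```

Each returned string would then be sent as its own message, ideally with a short pause between them.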

TechCrunch Messenger Bot

TechCrunch’s Messenger Bot sends a burst of short messages rather than one long message, to replicate how friends would communicate inside messaging apps.

Kia Niro Messenger Bot

Kia’s Niro bot continues this pattern, even dropping in an emoji between text content to keep it personable.

Setting expectations

One of the challenges with bots that are designed to feel human is that people might think they are talking to a real person. They might then begin asking the bot nuanced questions that a human would have no trouble answering, but that a bot might struggle with. The user ends up frustrated and gives up on the experience. To combat this, a bot can introduce itself at the beginning of the interaction, and clearly spell out what it can do for the user.
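
To make this concrete, here is a small Python sketch of a bot that introduces itself with a list of what it can do and falls back to a clear message for anything else. The bot name, the capabilities, and the keyword matching are all hypothetical and chosen only for the example; a real bot would likely use something more robust than substring checks.

```python
from typing import Dict

# Hypothetical capabilities: keyword to look for -> what the bot can do.
CAPABILITIES: Dict[str, str] = {
    "flight": "search for flights",
    "hotel": "search for hotels",
}


def introduction(bot_name: str, capabilities: Dict[str, str]) -> str:
    """Tell the user up front that this is a bot and what it can help with."""
    tasks = " or ".join(capabilities.values())
    return f"Hi, I'm {bot_name}, a bot. I can {tasks}."


def reply(message: str, capabilities: Dict[str, str]) -> str:
    """Handle a known request, or explain the bot's limits instead of guessing."""
    lowered = message.lower()
    for keyword, task in capabilities.items():
        if keyword in lowered:
            return f"Sure, I can {task}. Where are you headed?"
    tasks = " or ".join(capabilities.values())
    return f"Sorry, I can't help with that yet. I can {tasks}."


if __name__ == "__main__":
    print(introduction("TravelBot", CAPABILITIES))
    print(reply("I need a flight to Boston", CAPABILITIES))
    print(reply("Can you rent me a car?", CAPABILITIES))
```

The fallback repeats the list of supported tasks so that an unsupported request ends with a next step rather than a dead end.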

Hipmunk Messenger Bot

At the start of a conversation, the Hipmunk Messenger Bot communicates what it can be asked. And it’s a good thing it does, because when it encountered a different input (in this case, a request to rent a car), it replied incorrectly, prompting for flight details instead. This would be a far better interaction with an error message, but it’s a start.

Lyft

The Lyft Messenger Bot has a similar issue. It initially presents the user with its only function (book a ride) and even a button to do it. Entering a different message triggers an error. That said, it’s unclear why this error message isn’t delivered by the bot itself.

So…

It appears the macro-trend here is that the current bots are being designed to feel like the user is interacting with a person, not a computer. It will be interesting to see if this trend continues, or if “human-like” bot design is simply an onboarding mechanism into conversational UI in the same way skeuomorphism was into touch UI. And perhaps, like touch UI, the design language will evolve once users develop a fluency. It will be exciting to find out.

Have you noticed any other patterns I have missed? Disagree with me? Please let me know in the comments.